Overview

This page describes the basic commands for creating, managing, and deleting Kubernetes clusters with CSE. The primary tool for these operations is the vcd cse client command.

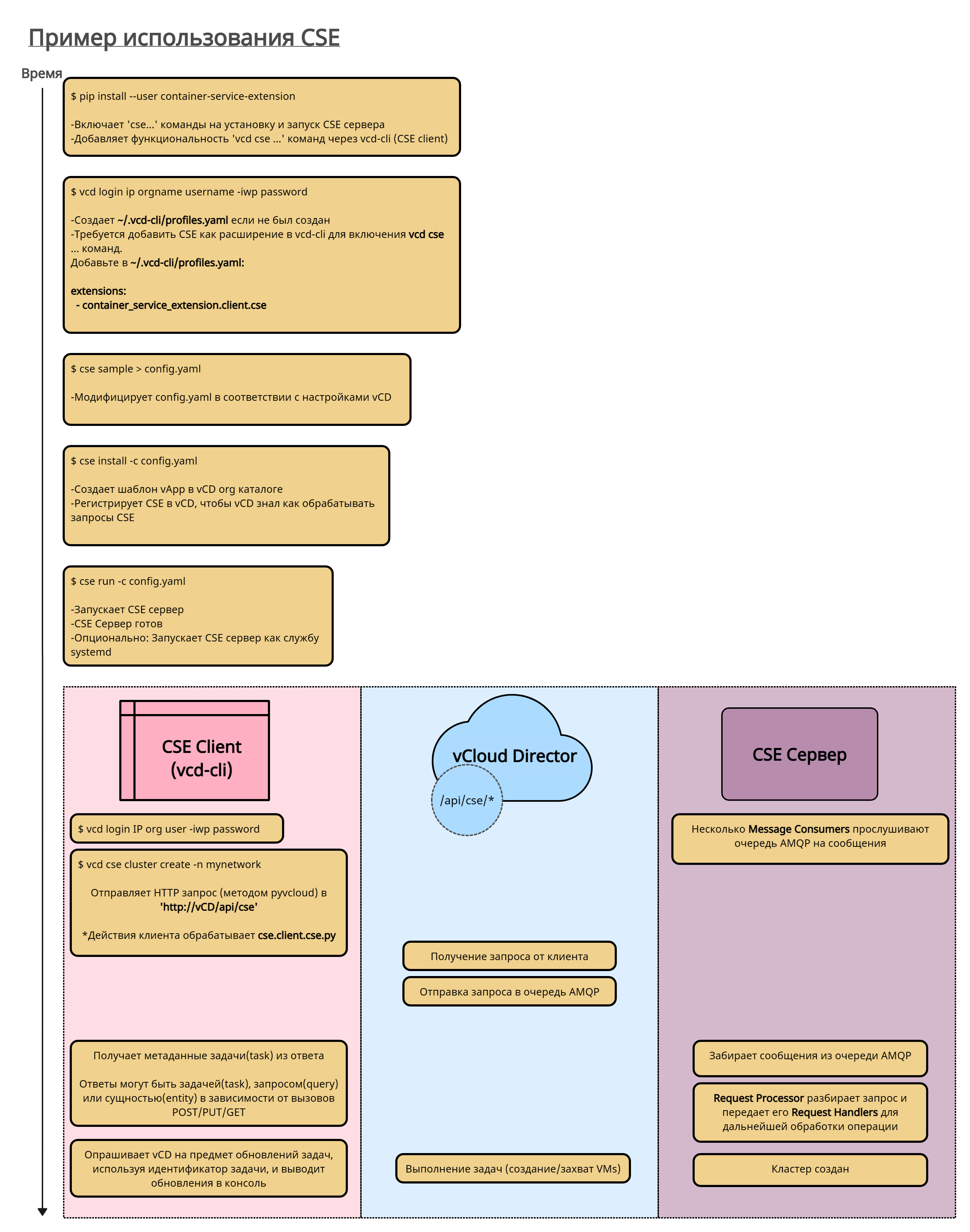

Here is an overview of the process a vOrg administrator must follow to install vcd cse and create a cluster. It includes some of the internal aspects of the CSE so that you can understand what goes on "behind the scenes".

Kubernetes CSE clusters can include persistent volumes mounted on NFS. Procedures for creating and managing NFS nodes can be found in the Managing NFS Nodes section.

Commands

The vcd cse commands are used by organization administrators and users to:

- list templates

- get the CSE server status

- create, list, get information about, and delete clusters and nodes

Below is a summary of the commands available for viewing templates and managing clusters and nodes:

| Command | API version 35.0 | API version <= 34.0 | Description |
|---|---|---|---|
| vcd cse template list | Yes | Yes | List the templates from which a Kubernetes cluster can be deployed. |
| vcd cse cluster apply CLUSTER_CONFIG.YAML | Yes | No | Create or update a Kubernetes cluster. |
| vcd cse cluster create CLUSTER_NAME | No | Yes | Create a new Kubernetes cluster. |
| vcd cse cluster create CLUSTER_NAME --enable-nfs | No | Yes | Create a new Kubernetes cluster with NFS persistent volume support. |
| vcd cse cluster list | Yes | Yes | List the available Kubernetes clusters. |
| vcd cse cluster info CLUSTER_NAME | Yes | Yes | Get detailed information about a Kubernetes cluster. |
| vcd cse cluster resize CLUSTER_NAME | No | Yes | Grow a Kubernetes cluster by adding new nodes. |
| vcd cse cluster config CLUSTER_NAME | Yes | Yes | Get the kubectl configuration file of a Kubernetes cluster. |
| vcd cse cluster upgrade-plan CLUSTER_NAME | Yes | Yes | Get the allowed upgrade paths for the Kubernetes software on the cluster. |
| vcd cse cluster upgrade CLUSTER_NAME TEMPLATE_NAME TEMPLATE_REVISION | Yes | Yes | Upgrade the Kubernetes software on the cluster to the given template and revision. |
| vcd cse cluster delete CLUSTER_NAME | Yes | Yes | Delete a Kubernetes cluster. |
| vcd cse cluster delete-nfs CLUSTER_NAME NFS_NODE_NAME | Yes | No | Delete an NFS node from the given Kubernetes cluster. |
| vcd cse node create CLUSTER_NAME --nodes n | No | Yes | Add n nodes to a Kubernetes cluster. |
| vcd cse node create CLUSTER_NAME --nodes n --enable-nfs | No | Yes | Add an NFS node to a Kubernetes cluster. |
| vcd cse node list CLUSTER_NAME | No | Yes | List the nodes of a cluster. |
| vcd cse node info CLUSTER_NAME NODE_NAME | No | Yes | Get detailed information about a node in a Kubernetes cluster. |
| vcd cse node delete CLUSTER_NAME NODE_NAME | No | Yes | Remove nodes from a cluster. |
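To check the version split in the table from a script, the Yes/No columns can be encoded as a Python dictionary. This is a minimal sketch: COMMAND_SUPPORT and commands_for are hypothetical names, not part of CSE, and the function deliberately rejects API versions the table does not cover instead of guessing.

```python
from typing import Dict, List, Tuple

# (available with API version 35.0, available with API version <= 34.0),
# copied from the command table; the --enable-nfs variants are folded into "create".
COMMAND_SUPPORT: Dict[str, Tuple[bool, bool]] = {
    "template list": (True, True),
    "cluster apply": (True, False),
    "cluster create": (False, True),
    "cluster list": (True, True),
    "cluster info": (True, True),
    "cluster resize": (False, True),
    "cluster config": (True, True),
    "cluster upgrade-plan": (True, True),
    "cluster upgrade": (True, True),
    "cluster delete": (True, True),
    "cluster delete-nfs": (True, False),
    "node create": (False, True),
    "node list": (False, True),
    "node info": (False, True),
    "node delete": (False, True),
}


def commands_for(api_version: float) -> List[str]:
    """Return the vcd cse subcommands the table lists for an API version."""
    if api_version == 35.0:
        column = 0
    elif api_version <= 34.0:
        column = 1
    else:
        # The table only describes 35.0 and <= 34.0.
        raise ValueError(f"API version {api_version} is not covered by the table")
    return sorted(cmd for cmd, support in COMMAND_SUPPORT.items() if support[column])
```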

For CSE < 3.0, by default, the CSE Client will display the progress of a task until the task completes or fails. The --no-wait flag can be used to skip waiting for a task. The CSE Client will still display task information in the console, and the end user can choose to monitor task progress manually.

```sh
> vcd --no-wait cse cluster create CLUSTER_NAME --network intranet --ssh-key ~/.ssh/id_rsa.pub

# display the status and progress of the task
> vcd task wait 377e802d-f278-44e8-9282-7ab822017cbd

# list the tasks currently running in the organization
> vcd task list running
```
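vcd task wait already does the waiting for you. If you monitor tasks from your own tooling instead, manual monitoring amounts to polling the task status until it reaches a terminal state. The following is a minimal sketch, assuming a hypothetical caller-supplied get_status function that returns the vCD status string of a task; the 30-minute default timeout is an arbitrary choice.

```python
import time
from typing import Callable

# Terminal vCD task statuses; "queued", "preRunning" and "running" are not terminal.
TERMINAL_STATUSES = {"success", "error", "canceled", "aborted"}


def wait_for_task(get_status: Callable[[str], str], task_id: str,
                  poll_seconds: float = 5.0, timeout_seconds: float = 1800.0) -> str:
    """Poll get_status(task_id) until the task reaches a terminal status.

    get_status is a hypothetical, caller-supplied function that returns the
    current status string of the task with the given ID.
    """
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = get_status(task_id)
        if status in TERMINAL_STATUSES:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"task {task_id} is still {status!r} after {timeout_seconds} s")
        time.sleep(poll_seconds)
```

Injecting get_status keeps the loop independent of how the status is obtained, which also makes it easy to test with a fake status source.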

CSE 3.0 Cluster apply Command

vcd cse cluster apply <create_cluster.yaml> takes a cluster specification file as input and applies it to a cluster resource. The cluster resource is created if it does not exist.

Examples of command usage:

- vcd cse cluster apply <resize_cluster.yaml> applies the specification to the named cluster resource; the resource is created if it does not exist.

- vcd cse cluster apply --sample --tkg generates a sample specification file for TKG clusters.

- vcd cse cluster apply --sample --native generates a sample specification file for native clusters.

Sample input specification file:

```yaml
# Short description of the properties used in this sample cluster configuration
# kind: The kind of Kubernetes cluster.
#
# metadata: This is a mandatory section.
# metadata.cluster_name: The name of the cluster to be created or resized.
# metadata.org_name: The name of the organization in which the cluster is to be created or managed.
# metadata.ovdc_name: The name of the organization VDC in which the cluster is to be created or managed.
#
# spec: User specification of the desired state of the cluster.
# spec.control_plane: Optional subsection for the desired control plane state of the cluster.
#   The "sizing_class" and "storage_profile" properties can only be specified during cluster
#   creation; they cannot be changed by subsequent update operations such as "resize" and "upgrade".
# spec.control_plane.count: The number of control plane nodes. Only a single control plane node is supported.
# spec.control_plane.sizing_class: The sizing policy with which the control plane node is provisioned
#   in the given "ovdc". The policy is expected to be already published to this ovdc.
# spec.control_plane.storage_profile: The storage profile with which the control plane node is provisioned
#   in the given "ovdc". The profile is expected to be available on this ovdc.
#
# spec.k8_distribution: This is a mandatory subsection.
# spec.k8_distribution.template_name: Template name, based on the guest OS, Kubernetes version, and Weave version.
# spec.k8_distribution.template_revision: Template revision number.
#
# spec.nfs: Optional subsection for the desired NFS state of the cluster.
#   The "sizing_class" and "storage_profile" properties can only be specified during cluster
#   creation; they cannot be changed by subsequent update operations such as "resize" and "upgrade".
# spec.nfs.count: NFS nodes can only be scaled up, not down. The default value is 0.
# spec.nfs.sizing_class: The sizing policy with which NFS nodes are provisioned in the given "ovdc".
#   The policy is expected to be already published to this ovdc.
# spec.nfs.storage_profile: The storage profile with which NFS nodes are provisioned in the given "ovdc".
#   The profile is expected to be available on this ovdc.
#
# spec.settings: This is a mandatory subsection.
# spec.settings.network: Mandatory. The name of the organization VDC network.
# spec.settings.rollback_on_failure: Optional, defaults to true. If true, the node VMs affected
#   by any cluster operation failure are deleted automatically.
# spec.settings.ssh_key: Optional SSH key that users can use to log in to node VMs without
#   explicitly providing passwords.
#
# spec.workers: Optional subsection for the desired worker state of the cluster.
#   The "sizing_class" and "storage_profile" properties can only be specified during cluster
#   creation; they cannot be changed by subsequent update operations such as "resize" and "upgrade".
#   Heterogeneous worker nodes are not supported.
# spec.workers.count: The number of worker nodes (default: 1). Worker nodes can be scaled up and down.
# spec.workers.sizing_class: The sizing policy with which worker nodes are provisioned in the given "ovdc".
#   The policy is expected to be already published to this ovdc.
# spec.workers.storage_profile: The storage profile with which worker nodes are provisioned in the given "ovdc".
#   The profile is expected to be available on this ovdc.
#
# status: The current state of the cluster on the server. This section is not required for any operation.

api_version: ''
kind: native
metadata:
  cluster_name: cluster_name
  org_name: organization_name
  ovdc_name: org_virtual_datacenter_name
spec:
  control_plane:
    count: 1
    sizing_class: Large_sizing_policy_name
    storage_profile: Gold_storage_profile_name
  expose: false
  k8_distribution:
    template_name: ubuntu-16.04_k8-1.17_weave-2.6.0
    template_revision: 2
  nfs:
    count: 1
    sizing_class: Large_sizing_policy_name
    storage_profile: Platinum_storage_profile_name
  settings:
    network: ovdc_network_name
    rollback_on_failure: true
    ssh_key: null
  workers:
    count: 2
    sizing_class: Medium_sizing_policy_name
    storage_profile: Silver_storage_profile
status:
  cni: null
  exposed: False
  docker_version: null
  kubernetes: null
  nodes: null
  os: null
  phase: null
  task_href: null
```
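Because some properties can only be set at creation time, it can help to check an edited specification before running vcd cse cluster apply. The sketch below is a hypothetical pre-flight check (check_update is not a CSE function) that compares the spec sections of the original and the edited file, passed in as dictionaries, for example loaded with a YAML parser of your choice. It encodes only the rules stated in the sample file's comments; CSE itself ignores changes to sizing_class and storage_profile after creation, so the sketch flags them to keep such edits from going unnoticed.

```python
from typing import Any, Dict, List

# Per the sample file comments, these properties can be set only at creation.
IMMUTABLE_SECTIONS = ("control_plane", "nfs", "workers")
IMMUTABLE_KEYS = ("sizing_class", "storage_profile")


def _section(spec: Dict[str, Any], name: str) -> Dict[str, Any]:
    # A missing or null (None) subsection is treated as empty.
    return spec.get(name) or {}


def check_update(old_spec: Dict[str, Any], new_spec: Dict[str, Any]) -> List[str]:
    """Compare the "spec" sections of an existing and an edited cluster specification."""
    problems = []
    for name in ("k8_distribution", "settings"):
        if not new_spec.get(name):
            problems.append(f"spec.{name} is mandatory")
    if not _section(new_spec, "settings").get("network"):
        problems.append("spec.settings.network is mandatory")
    if _section(new_spec, "control_plane").get("count", 1) != 1:
        problems.append("only a single control plane node is supported")
    # NFS nodes can only be scaled up (default count is 0).
    if _section(new_spec, "nfs").get("count", 0) < _section(old_spec, "nfs").get("count", 0):
        problems.append("spec.nfs.count can only be increased")
    for section in IMMUTABLE_SECTIONS:
        for key in IMMUTABLE_KEYS:
            old = _section(old_spec, section).get(key)
            new = _section(new_spec, section).get(key)
            if old is not None and new is not None and old != new:
                problems.append(f"spec.{section}.{key} cannot change after creation")
    return problems
```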


Updating software installed on Kubernetes clusters

Kubernetes is fast-evolving software, with a new minor release every three months and numerous patch releases (including security patches) in between. To keep already deployed clusters up to date, CSE 2.6.0 added support for in-place software upgrades of Kubernetes clusters. The following software can be upgraded to newer versions:

- Kubernetes components, such as kube-apiserver, kubelet, and kube-dns

- Weave (CNI)

- Docker engine

The upgrade matrix is built from CSE's own templates. The template originally used to deploy a cluster determines which target templates are valid for upgrading it. Supported upgrade paths can be discovered with the following command:

vcd cse cluster upgrade-plan 'mycluster'

Suppose the cluster was deployed from template T1, which is based on Kubernetes version x.y.z. Potential upgrade targets then satisfy at least one of the following criteria:

- The latest revision of template T1, based on Kubernetes version x.y.w, where w > z.

- A template T2 with the same base OS, based on Kubernetes version x.(y+1).v, where v can be any value.
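CSE computes the actual upgrade plan on the server, and vcd cse cluster upgrade-plan remains the authoritative answer. To make the two criteria concrete, here is a sketch that applies them to a list of templates. The Template tuple and its fields are illustrative assumptions, not CSE's data model.

```python
from typing import List, NamedTuple, Tuple


class Template(NamedTuple):
    name: str                  # template name, e.g. "ubuntu-16.04_k8-1.17_weave-2.6.0"
    revision: int              # template revision
    os: str                    # base guest OS
    k8s: Tuple[int, int, int]  # Kubernetes version (x, y, z)


def upgrade_candidates(current: Template, available: List[Template]) -> List[Template]:
    x, y, z = current.k8s
    candidates = []
    # Criterion 1: the latest revision of the same template (T1), if it is based on x.y.w with w > z.
    same_template = [t for t in available if t.name == current.name]
    if same_template:
        latest = max(same_template, key=lambda t: t.revision)
        lx, ly, lw = latest.k8s
        if (lx, ly) == (x, y) and lw > z:
            candidates.append(latest)
    # Criterion 2: a different template (T2) with the same base OS, based on x.(y+1).v for any v.
    for t in available:
        tx, ty, _ = t.k8s
        if t.name != current.name and t.os == current.os and (tx, ty) == (x, y + 1):
            candidates.append(t)
    return candidates
```

Note that a minor-version jump of more than one (x.(y+2).v) is not a candidate under these criteria; such a cluster would be upgraded in steps.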

If you don't see the target template you want for upgrading your cluster, please file a GitHub issue.

You can upgrade the cluster with the following command:

vcd cse cluster upgrade 'mycluster' TEMPLATE_NAME TEMPLATE_REVISION

The upgrade causes near-zero downtime if all of the following conditions are met:

- Docker is not upgraded.

- Weave (CNI) is not upgraded.

- The Kubernetes upgrade changes only the patch version.

If any of these conditions is not met, the cluster will be unavailable for about a minute or longer, depending on the actual upgrade process.
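The three conditions can be expressed as a small decision function. This is a sketch only: the component keys (docker, weave, kubernetes) and the dotted version-string format are assumptions, and the real downtime depends on CSE's upgrade process.

```python
from typing import Dict, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    # "1.17.3" -> (1, 17, 3)
    return tuple(int(part) for part in version.split("."))


def expected_downtime(current: Dict[str, str], target: Dict[str, str]) -> str:
    """Classify an upgrade using the three conditions listed in this section.

    current and target map the hypothetical keys "docker", "weave" and
    "kubernetes" to version strings.
    """
    docker_unchanged = current["docker"] == target["docker"]
    weave_unchanged = current["weave"] == target["weave"]
    # A patch-only upgrade keeps the major and minor Kubernetes version.
    patch_only = parse_version(current["kubernetes"])[:2] == parse_version(target["kubernetes"])[:2]
    if docker_unchanged and weave_unchanged and patch_only:
        return "near-zero downtime"
    return "about a minute or longer"
```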

Automation

vcd cse commands can be scripted to automate the creation and operation of Kubernetes clusters and nodes.

Users can also interact with CSE through the Python package (container-service-extension) or through the CSE REST API exposed by VCD.
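When scripting the vcd cse CLI from Python rather than using the package, building the argument list in code avoids shell-quoting problems. The sketch below assembles a vcd cse cluster create command for CSE < 3.0 (API version <= 34.0) using only the flags shown on this page; cluster_create_argv is a hypothetical helper, not part of CSE.

```python
import os
from typing import List, Optional


def cluster_create_argv(name: str, network: str, ssh_key_path: str,
                        nodes: Optional[int] = None, enable_nfs: bool = False,
                        no_wait: bool = False) -> List[str]:
    """Build the argument list for `vcd cse cluster create` (CSE < 3.0)."""
    argv = ["vcd"]
    if no_wait:
        # --no-wait is a vcd option and goes before the "cse" subcommand.
        argv.append("--no-wait")
    argv += ["cse", "cluster", "create", name, "--network", network]
    if nodes is not None:
        argv += ["--nodes", str(nodes)]
    # No shell is involved, so expand "~" here instead of relying on the shell.
    argv += ["--ssh-key", os.path.expanduser(ssh_key_path)]
    if enable_nfs:
        argv.append("--enable-nfs")
    return argv
```

To run the command, pass the list to subprocess.run(argv, check=True), which raises CalledProcessError if vcd exits with a non-zero status.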

The following Python script creates a Kubernetes cluster on vCloud Director:

```python
#!/usr/bin/env python3
from pyvcloud.vcd.client import BasicLoginCredentials
from pyvcloud.vcd.client import Client
from container_service_extension.client.cluster import Cluster

# Log in to vCloud Director as user "usr1" of organization "org1".
client = Client('vcd.mysp.com')
client.set_credentials(BasicLoginCredentials('usr1', 'org1', '******'))

# Request creation of cluster "cluster1" in VDC "vdc1", connected to network "net1".
cse = Cluster(client)
result = cse.create_cluster('vdc1', 'net1', 'cluster1')

# Fetch the task started by CSE and block until it finishes.
task = client.get_resource(result['task_href'])
task = client.get_task_monitor().wait_for_status(task)
print(task.get('status'))

client.logout()
```

Usage Examples

Note that some of the commands are version-dependent: not all commands apply to every version of CSE. Refer to the CLI commands for each version of CSE.

```sh
# [CSE 3.0] create a cluster
> vcd cse cluster apply create_cluster.yaml

# [CSE 3.0] resize the cluster
> vcd cse cluster apply resize_cluster.yaml

# [CSE 3.0] scale up the NFS nodes of a given cluster
> vcd cse cluster apply scale_up_nfs.yaml

# [CSE 3.0] delete an NFS node from a given cluster
> vcd cse cluster delete-nfs mycluster nfsd-ghyt
```

```sh
# create cluster mycluster with one control plane node and two worker nodes, connected to the provided network;
# a public key is provided for logging in to the VMs over SSH
> vcd cse cluster create mycluster --network intranet --ssh-key ~/.ssh/id_rsa.pub

# list the worker nodes of the cluster
> vcd cse node list mycluster

# create cluster mycluster with one control plane node and three worker nodes, connected to the provided network
> vcd cse cluster create mycluster --network intranet --nodes 3 --ssh-key ~/.ssh/id_rsa.pub

# create cluster mycluster with one control plane node and no worker nodes, connected to the provided network;
# worker nodes can be added later
> vcd cse cluster create mycluster --network intranet --nodes 0 --ssh-key ~/.ssh/id_rsa.pub

# create cluster mycluster with one control plane node and three worker nodes, connected to the provided network,
# plus one node acting as an NFS server
> vcd cse cluster create mycluster --network intranet --nodes 3 --ssh-key ~/.ssh/id_rsa.pub --enable-nfs

# add 2 worker nodes, each with 4 GB of RAM and 4 CPUs, from the photon template,
# using the specified storage profile
> vcd cse node create mycluster --nodes 2 --network intranet --ssh-key ~/.ssh/id_rsa.pub --memory 4096 --cpu 4 --template-name sample_photon_template --template-revision 1 --storage-profile sample_storage_profile

# add 1 nfsd node with 4 GB of RAM and 4 CPUs, from the photon template,
# using the specified storage profile
> vcd cse node create mycluster --nodes 1 --type nfsd --network intranet --ssh-key ~/.ssh/id_rsa.pub --memory 4096 --cpu 4 --template-name sample_photon_template --template-revision 1 --storage-profile sample_storage_profile

# resize the cluster to 8 worker nodes; if resizing fails, the cluster returns to its original size
# '--network' is only applicable to clusters using the native (VCD) Kubernetes provider
> vcd cse cluster resize mycluster --network mynetwork --nodes 8

# get information about a node; for an nfsd node, its export information is also displayed
> vcd cse node info mycluster nfsd-dj3s

# remove 2 nodes from the cluster
> vcd cse node delete mycluster node-dj3s node-k2lp --yes

# list the available clusters
> vcd cse cluster list

# get information about the cluster
> vcd cse cluster info mycluster
```

```sh
# save the cluster's kubectl configuration
> vcd cse cluster config mycluster > ~/.kube/config

# check the cluster configuration
> kubectl get nodes

# deploy a sample application
> kubectl create namespace sock-shop
> kubectl apply -n sock-shop -f "https://github.com/microservices-demo/microservices-demo/blob/master/deploy/kubernetes/complete-demo.yaml?raw=true"

# check that all pods are running and ready
> kubectl get pods --namespace sock-shop

# access the application
> IP=`vcd cse cluster list|grep '\ mycluster'|cut -f 1 -d ' '`
> open "http://${IP}:30001"

# delete the cluster when it is no longer needed
> vcd cse cluster delete mycluster --yes
```
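The IP lookup in the examples relies on grep and cut. A Python counterpart is sketched below; like the shell pipeline, it assumes that the cluster's IP address is the first column of the vcd cse cluster list output, which you should confirm against your CSE version. Unlike grep '\ mycluster', it matches the cluster name as a whole field, so mycluster2 is not picked up when looking for mycluster. first_column_for is a hypothetical helper.

```python
from typing import List


def first_column_for(listing: str, cluster_name: str) -> List[str]:
    # Python counterpart of: grep '\ mycluster' | cut -f 1 -d ' '
    # Assumption (same as the shell pipeline): the IP address is the first
    # whitespace-separated column and the cluster name appears in a later column.
    matches = []
    for line in listing.splitlines():
        fields = line.split()
        # Match the name as a whole field, so "mycluster2" does not match "mycluster".
        if cluster_name in fields[1:]:
            matches.append(fields[0])
    return matches
```

For example, pass it subprocess.run(["vcd", "cse", "cluster", "list"], capture_output=True, text=True, check=True).stdout.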

Creating clusters in organisations with routed OrgVDC networks

Typically, CSE requires a directly connected OrgVDC network to deploy K8s clusters. This ensures that the cluster virtual machines are accessible from outside the OrgVDC network. NSX-T does not offer directly connected OrgVDC networks, so routed OrgVDC networks are used to deploy K8s clusters. To give cluster virtual machines connected to NSX-T-backed routed OrgVDC networks Internet access while retaining access to the cluster, CSE 3.0.2 offers the cluster expose option.

As a prerequisite, the network must be configured to accept inbound external traffic and to send outbound traffic.

Users who deploy clusters must have the following rights to use the expose functionality:

- Gateway View

- NAT View Only

- NAT Configure

If any of these rights is missing, CSE ignores the request to expose the K8s cluster.

To expose a K8s cluster, specify expose: True in the spec section of the cluster specification file when running the first vcd cse cluster apply command, the one that creates the cluster. CSE ignores any attempt to expose a cluster after it has been created. Once the cluster is exposed, a new exposed field appears in the status section of the cluster and is set to True.

Users can un-expose a cluster by setting the expose field to False and applying the updated specification with vcd cse cluster apply. The exposed field is False for clusters that are not exposed. Once an exposed cluster has been un-exposed, it cannot be exposed again.
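The expose rules in this section amount to a small state transition. The following sketch models the value of the exposed status field after an apply, based only on the rules stated here; resulting_exposed is a hypothetical function, not CSE's implementation.

```python
from typing import Set

# Rights listed in this section as required for the expose functionality.
REQUIRED_RIGHTS = {"Gateway View", "NAT View Only", "NAT Configure"}


def resulting_exposed(*, creating: bool, currently_exposed: bool,
                      requested_expose: bool, user_rights: Set[str]) -> bool:
    """Value of status.exposed after `vcd cse cluster apply`, per this section's rules."""
    if creating:
        # expose is honoured only on the apply that creates the cluster, and
        # only when the user holds every required right; otherwise it is ignored.
        return requested_expose and REQUIRED_RIGHTS <= user_rights
    if currently_exposed and not requested_expose:
        # Un-exposing an existing cluster is allowed. The section does not say
        # which rights this needs, so none are checked here.
        return False
    # Exposing after creation, or re-exposing an un-exposed cluster, is ignored.
    return currently_exposed
```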